The Rise of LLMs

Large Language Models (LLMs) have been around for years, but the mainstream launch of ChatGPT and OpenAI's other models in 2022 was the first time many people interacted with one. It didn't take long for LLMs to become a major part of everyday life.

Since the rise of ChatGPT, other competitors have staked their claim in the AI provider space. Google released its Gemini models [01]. Anthropic launched Claude Sonnet (among others), which has become a core model for developers [02]. OpenAI released o1 for reasoning and DALL-E and Sora for image and video generation [03].

LLMs are increasingly being integrated into all facets of life. You may have used one to place your order at a fast food drive-thru. LLMs power customer service voice bots [04]. Models are even being built into humanoid robots for use in manufacturing and home assistance [05].

Whether you're looking to leverage AI to streamline operations, you're founding an AI startup, or you're integrating AI into an existing tech stack, understanding the basics of AI economics can help drive sound business decisions.

Our goal is to help you understand some of the basics to kickstart your journey. Let's get started!

What is an LLM?

An LLM is a mathematical model whose design is loosely inspired by the human brain, thus the term Artificial Intelligence.

LLMs aren't just built, they're trained. Models go through a training process where they are exposed to a wide array of data — websites, books, images, and more. LLMs use math operations to find relationships between variables in the data.

For example, certain words are commonly found together. If you read "The United..." your mind most likely jumps to "States" or "Kingdom". Your brain has been trained on data and experiences throughout your life to predict the words most likely to appear next.

Similar to a brain, a model's collective knowledge can be called upon. This is called inference. LLMs can be given a specific request, and based on their training they can predict the most probable response.
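To make the idea of training and inference concrete, here is a minimal sketch of a toy "next word" predictor. It is not how a real LLM is built: it only counts which word follows which in a tiny made-up corpus (the `CORPUS` sentences and the function names `train_bigram` and `predict_next` are assumptions for illustration), then uses those counts to rank likely next words.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus standing in for training data (illustrative only).
CORPUS = [
    "the united states is a country",
    "the united states has fifty states",
    "the united kingdom is a country",
]


def train_bigram(sentences):
    """'Training': count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """'Inference': list candidate next words with estimated probabilities,
    most likely first. Returns an empty list for a word never seen in training."""
    followers = counts[word]
    total = sum(followers.values())
    return [(w, n / total) for w, n in followers.most_common()]


model = train_bigram(CORPUS)
for candidate, probability in predict_next(model, "united"):
    print(f"{candidate}: {probability:.2f}")
```

In this corpus "united" is followed by "states" twice and "kingdom" once, so "states" is ranked first. The ranking reflects only what was in the training data, which is why a likely answer is not always a correct one.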

It's important to note that "most probable" doesn't necessarily mean "correct". Because LLMs rely on mathematical relationships, sometimes they can give a response that is likely, but false. This is called hallucination.

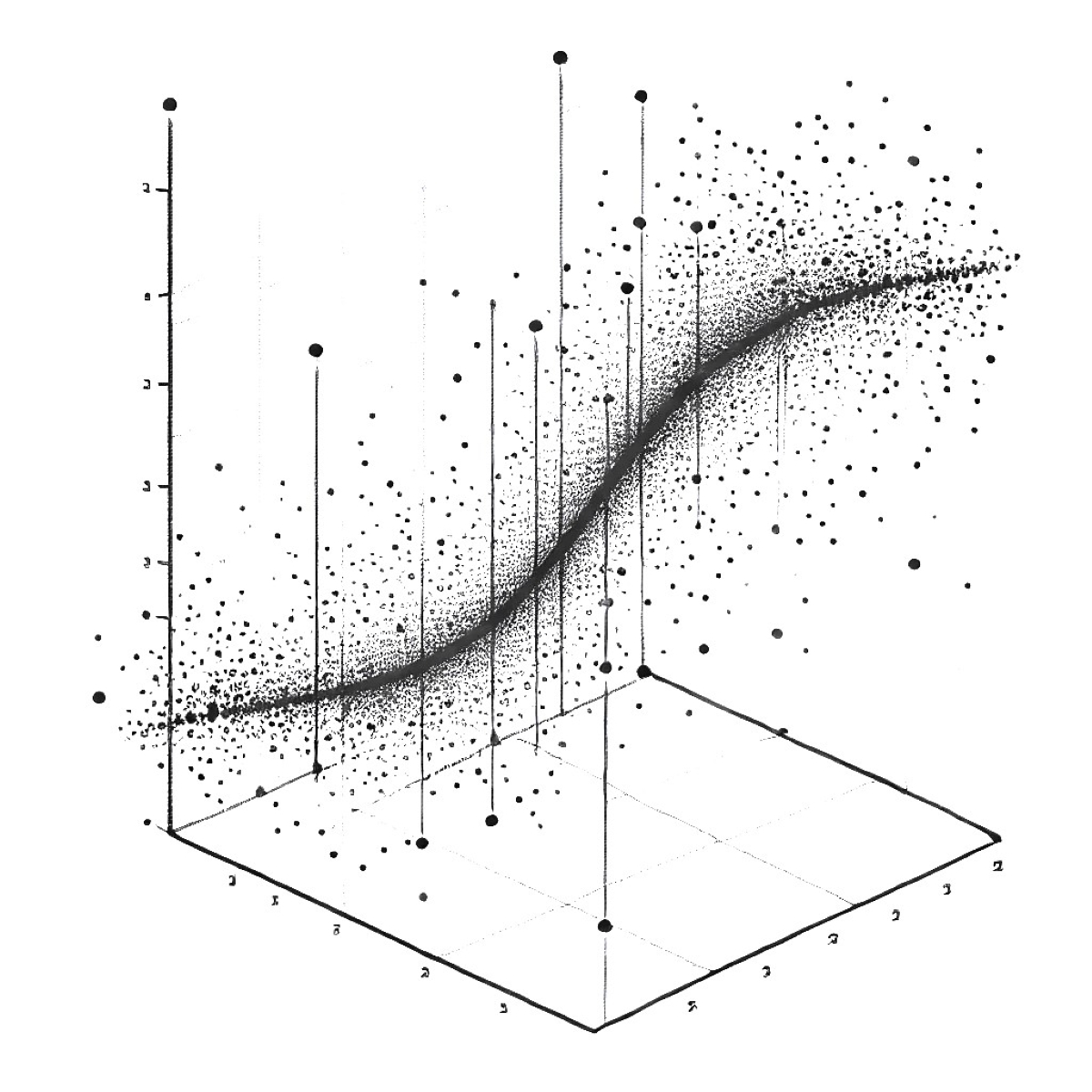

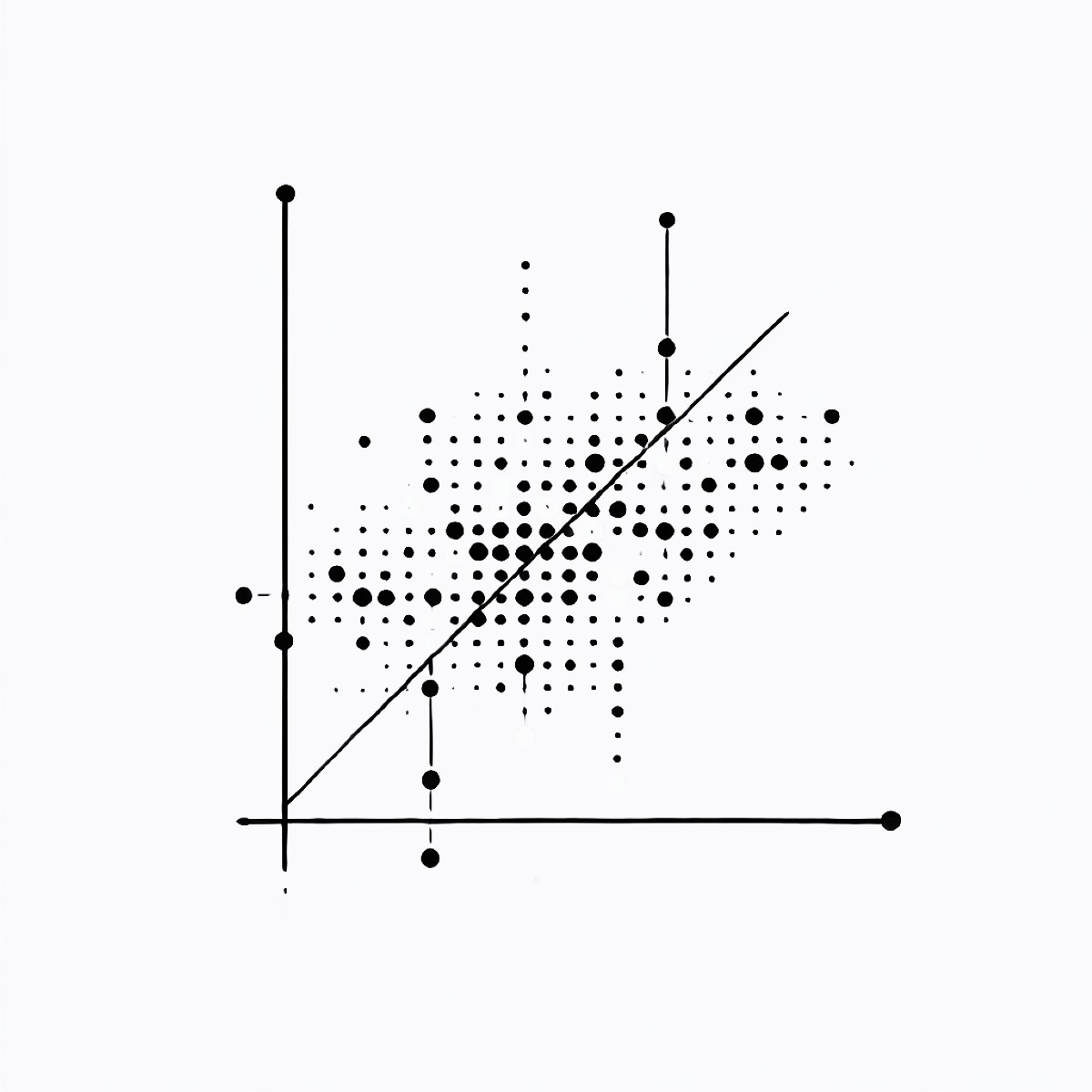

One way to make LLMs more tangible is to compare them to something you likely learned long ago.

y = mx + b

In school you learned that the formula above is the equation of a line. If you have a dataset with real observations, you can use matrix algebra to determine the slope (m) and intercept (b) and draw a line of best fit for that data.

With your line of best fit, you can use input data (x) to predict output data (y). It doesn't mean you will be 100% correct, but your equation will produce the most likely output based on the data it was trained on.
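The line-of-best-fit idea can be sketched in a few lines of Python. This example uses the closed-form least-squares formulas for a single input variable, which give the same slope and intercept as the matrix approach. The data points and the function names `fit_line` and `predict` are made up for illustration.

```python
def fit_line(xs, ys):
    """'Training': find the slope m and intercept b that minimize squared error."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both left unnormalized).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    m = sxy / sxx
    b = mean_y - m * mean_x
    return m, b


def predict(m, b, x):
    """'Inference': use the fitted line to estimate y for a new x."""
    return m * x + b


# Made-up observations that roughly follow y = 2x.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

m, b = fit_line(xs, ys)
print(f"slope={m:.2f}, intercept={b:.2f}")
print(f"prediction for x=6: {predict(m, b, 6):.2f}")
```

The prediction for x = 6 is an estimate based on the five observations, not a guarantee, which mirrors how a model's output reflects the data it was trained on.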

Comparing LLMs to the equation y = mx + b is a bit of a stretch, since a line has two parameters while an LLM can have billions, but there is a loose parallel in how both processes operate. In essence, LLMs undergo a training process where they learn patterns in the data. As information is fed into an LLM, it begins by breaking that data into smaller units, called tokens, in a process known as tokenization.

These tokens represent the basic building blocks the model processes. Tokenization is a crucial step because the model cannot operate on raw text directly — it requires the data to be transformed into a format (tokens) that it can understand and manipulate.
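As a rough illustration of tokenization, the sketch below splits text into word and punctuation tokens and maps each distinct token to an integer ID. This is a simplification: production tokenizers typically use subword schemes such as byte pair encoding, so a single word may become several tokens. The function names `tokenize`, `build_vocab`, and `encode` are hypothetical.

```python
import re


def tokenize(text):
    """Split text into lowercase word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(tokens):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


def encode(text, vocab):
    """Convert text into the list of integer IDs the model would operate on."""
    return [vocab[token] for token in tokenize(text)]


text = "The United States and the United Kingdom."
tokens = tokenize(text)
vocab = build_vocab(tokens)

print(tokens)
print(encode(text, vocab))
```

Repeated words such as "the" and "united" map to the same ID each time they appear, so the model sees a sequence of numbers rather than raw characters.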

Once training is complete and the statistical relationships have been learned, the model can be used for inference. When you ask ChatGPT a question, it tokenizes your input, runs the tokens through the model, and returns the most probable output. Hopefully it is what you're looking for!

Tokens are important! Besides being the atomic unit of LLMs, tokens are in many cases the unit you are billed for, which matters especially for startups as their usage scales.
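To see why token counts matter for cost, here is a back-of-the-envelope sketch. It assumes a provider that bills input and output tokens separately at a price per million tokens. The prices and request volumes below are placeholders chosen only to make the arithmetic easy to follow, not real rates from any provider, and the function name `estimate_cost` is hypothetical.

```python
def estimate_cost(input_tokens, output_tokens,
                  input_price_per_million, output_price_per_million):
    """Estimate the cost of one request, in the same currency as the prices."""
    total = (input_tokens * input_price_per_million
             + output_tokens * output_price_per_million)
    return total / 1_000_000


# Placeholder values, NOT real pricing.
INPUT_PRICE = 1.00    # per million input tokens (assumed)
OUTPUT_PRICE = 2.00   # per million output tokens (assumed)

per_request = estimate_cost(1_500, 500, INPUT_PRICE, OUTPUT_PRICE)
per_month = per_request * 10_000 * 30  # assumed 10,000 requests a day for 30 days

print(f"per request: {per_request:.4f}")
print(f"per month:   {per_month:.2f}")
```

A fraction of a cent per request looks negligible, but multiplied across many requests it becomes a meaningful line item, which is why token usage is worth tracking early.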

Wrapping Up

Hopefully you're leaving with a better understanding of LLMs and how they work. This is foundational knowledge that becomes important if you are building with AI.

In our next topic — Tokens, GPUs & Product Cost — we'll dive deeper into token pricing and how to think about the cost to provide the products you're building.

Later, we'll cover Model Selection and how to make sense of the various models available for your products.

If you're seeking more help understanding how your AI investments impact your business strategy, please contact Piton Ventures to discuss how we can help your organization.

Let's build something great together.
